My team releases their software in ‘release trains’ of four weeks. Before we actually release the product, we run an (exploratory) regression test. To minimise the lead time and to maximise ownership within the team, we wanted to shift away from relying too much on a specialized tester. So our challenge was: how can we run our pre-release tests efficiently and distributed over the team members, while keeping track of the progress and securing the functional coverage?

We already had a nifty system in Confluence, in which we had our big list of testing topics and a way to mark them as done/failed/in progress (see my previous blogpost). However, editing a document in Confluence is not easy. Unlike Google Docs or other software, the content jumps around the screen if you toggle from view-mode to edit-mode, forcing you to find the specific line you intended to edit again. And since the list of testing topics was too long to fit on one page, the Product Owner kept complaining that he could not see ‘the progress’ at a glance (this last bit merits a blogpost of its own, but I’ll skip over that for now).

To tackle this we turned to Hiptest, a test management tool I’ll explain in more detail below.

Introduction to Hiptest

For quite a while I’ve been reluctant to move our testing scenarios to Hiptest. The main reason was that Hiptest is geared towards very detailed scripts (and automation), while I want to keep our scenarios vague. Vague scenarios foster exploration instead of walking the exact same path through the application over and over again. Note that what I call ‘testing scenarios’ is mostly an inventory of potential testing topics, with a description of the intended behaviour. Our scenarios do not specify how to perform the actual tests or with what parameters.

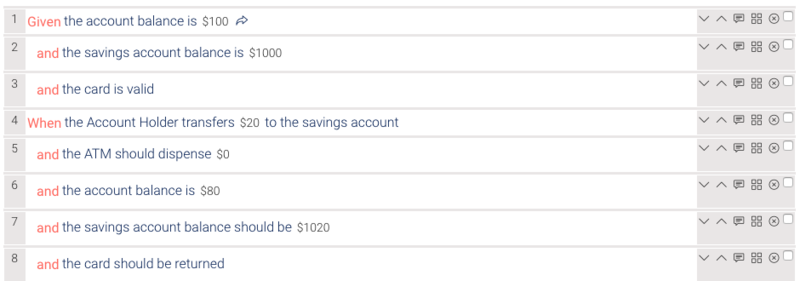

However, I was wrong in my assumption: Hiptest does not actually force you to describe the tests in detailed scripts. Sure, Hiptest is primarily a test management tool geared towards running automated tests (‘checks’, I’d prefer to say). Its sample projects all show scripts that are written in the Given-When-Then format. Below is an example of such a script for a cash withdrawal from an ATM:

This (core) part of Hiptest is not what this blogpost is about: our project already has plenty of unit tests (in the code) and integration tests on the API-level (in Postman), so I see little value in adding Hiptest to that stack.

How we (mis)use Hiptest

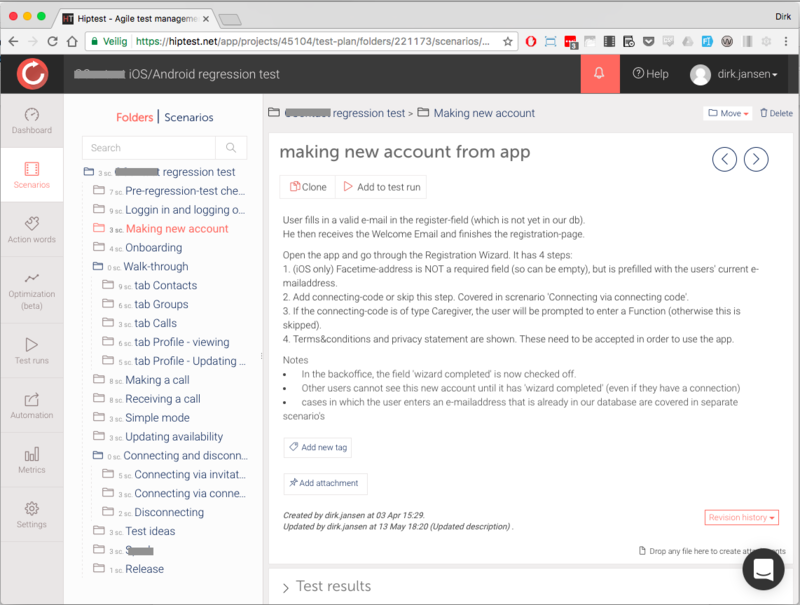

I noticed that Hiptest scenarios also have a field called ‘description’. Not much attention is given to this field in the tutorials, but it proved to be the perfect solution to our problems. It gives us the flexibility to enter any text, while still being able to use functionality like ‘test runs’. I’ll outline our setup first, and discuss how we use test runs afterwards.

Our setup is like this (Hiptest-keywords in bold):

- **Folders** are used to organise the **scenarios** in related topics.

- We use the **description** field of the **scenario** feature to describe the expected behaviour of a piece of functionality, and hints for the tester (if needed). Our scenarios are sometimes quite detailed, but never in the format ‘click here, click there’.

- We use the **tags** to indicate the priority of each scenario (low/medium/high).
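To make this setup a bit more concrete, here is a minimal Python sketch of it. This is not Hiptest's API or data model; the `Scenario` class, the `select_for_run` helper and the sample scenarios are all hypothetical names I made up for illustration. It only shows the idea: a scenario lives in a folder, carries a free-text description, and has a priority tag that can be used to pick scenarios for a quick or a thorough run.

```python
from dataclasses import dataclass

# Priority tags as described above; the tuple itself is an illustrative assumption.
PRIORITIES = ("low", "medium", "high")


@dataclass
class Scenario:
    """Hypothetical stand-in for a scenario: folder, free-text description, priority tag."""
    folder: str
    name: str
    description: str
    priority: str = "medium"

    def __post_init__(self):
        if self.priority not in PRIORITIES:
            raise ValueError(f"unknown priority tag: {self.priority!r}")


def select_for_run(scenarios, thorough=False):
    """Pick everything for a thorough run, only 'high' scenarios for a quick one."""
    if thorough:
        return list(scenarios)
    return [s for s in scenarios if s.priority == "high"]


# Made-up sample data, purely for illustration.
scenarios = [
    Scenario("Login", "Password reset", "User can request a reset link by e-mail.", "high"),
    Scenario("Login", "Remember me", "Session survives a browser restart.", "low"),
    Scenario("Reports", "Export to CSV", "Export contains all visible columns.", "high"),
]

quick = select_for_run(scenarios)
thorough = select_for_run(scenarios, thorough=True)
print([s.name for s in quick])
print(len(thorough))
```

The description stays plain prose on purpose: nothing in this model forces step-by-step scripts, which is exactly what we wanted.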

Running a test

When we want to perform a test run, we do the following:

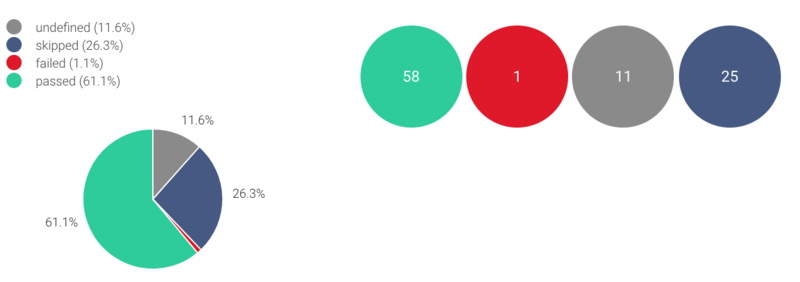

- On the tab ‘Test runs’ we select the scenarios we want to test (for a quick test we’ll only select the ones tagged ‘high’, for a thorough test we’ll select everything) and start the test. Hiptest will now make a test run overview, in which all the scenarios have test status ‘undefined’.

- If we are doing a distributed test (multiple separate testers or test pairs), we’ll tag the scenarios with an identifier (for example: a first name) to mark who is working on it. Note: this is a tag on the scenario *in the test run*, not on the scenario itself.

- We use the test status to differentiate between ‘passed’, ‘skipped’ (the tester decided to skip this based on risk assessment), ‘failed’ (a problem was found and should be triaged), ‘blocked’ (the issue was triaged and moved to our ticketing system JIRA) and ‘retest’ (the problem was fixed). Unfortunately, Hiptest does not support editing these labels, but the current labels are good enough for now.

- We iterate this process until the test status of all scenarios is either ‘passed’, ‘retest’ or ‘skipped’.

During this process, the progress is transparent to everyone involved. In practice, the decline in the number of scenarios that have status ‘undefined’ (meaning: not worked on yet) is a very good indicator of how far along the testing process is, even though some scenarios are far simpler than others.
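The bookkeeping behind this is simple enough to sketch in a few lines of Python. Again, this is not how Hiptest implements it, and the names `Status`, `progress` and `run_is_finished` are my own assumptions. The sketch only mirrors the statuses listed above, our rule for when a run is finished, and the ‘how many are still undefined’ indicator.

```python
from collections import Counter
from enum import Enum


class Status(Enum):
    """The test statuses we use in a run, with our own interpretation of each."""
    UNDEFINED = "undefined"  # not worked on yet
    PASSED = "passed"
    SKIPPED = "skipped"      # skipped based on risk assessment
    FAILED = "failed"        # problem found, needs triage
    BLOCKED = "blocked"      # triaged and moved to the ticketing system
    RETEST = "retest"        # the problem was fixed

# Our (team-specific) rule: the run is finished once every scenario has one of these.
DONE = {Status.PASSED, Status.RETEST, Status.SKIPPED}


def progress(statuses):
    """Return how many scenarios are still untouched and the fraction worked on."""
    counts = Counter(statuses)
    total = len(statuses)
    untouched = counts[Status.UNDEFINED]
    worked_on = (total - untouched) / total if total else 0.0
    return {"total": total, "untouched": untouched, "worked_on": worked_on}


def run_is_finished(statuses):
    """True when every scenario in the run has a status we consider final."""
    return all(status in DONE for status in statuses)


# Made-up snapshot of a run with four scenarios.
run = [Status.PASSED, Status.UNDEFINED, Status.FAILED, Status.SKIPPED]
print(progress(run))
print(run_is_finished(run))
```

Note that the ‘worked on’ fraction counts scenarios, not effort, so it shares the caveat mentioned above: a run that is 75% worked on may still have its hardest scenario left.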

Being clear on what parts of the application have not been tested yet makes it possible for any team member to jump in and out, switching between fixing important bugs and helping with testing.

The number of fails is usually only a handful. But still, the fact that we actually find significant stuff on a regular basis proves the value of this holistic, exploratory testing in addition to the automated checking that is also firmly in place.

Hiptest scenarios as an oracle

One benefit I’d especially like to mention at this point is that by having the Hiptest scenarios available to the whole team, and by working on the tests with the whole team, the scenarios have become an oracle in themselves. If during testing a tester stumbles upon functionality that was not clear, he’ll edit the scenario. And if the implementation of new features changes the intended behaviour of the application, the relevant Hiptest scenarios will be updated as well. This solves a long-running debate on how and where to have documentation. We now get our documentation basically for free, and it is accessible to everyone.

Conclusion

We moved from describing testing ideas and scenarios in Confluence, to having them in Hiptest. In doing so we created the following benefits:

- documentation of the product is easy and accessible to all;

- we have a clear progress indication of a test run;

- all team members can jump in on a test run.

So although Hiptest is intended as a test automation tool, it provides excellent value for us without using these features.

Are you interested in sharing thoughts on testing, testing tools and related stuff? Sign up for the testing meetups I organise via the Ministry of Testing Utrecht. Or contact me at dirk.jansen@infi.nl.